The Deep Weirdness of Artificial Intelligence

Part One in a series of articles that break down the AI big bang.

Welcome to The Owner’s Guide to the Future. This week I will share a series of analyses I’ve written about recent developments in the field of artificial intelligence.

The pace of new products and fresh announcements has been intense. The torrent of news has been accompanied by dramatic reactions that range from euphoria to fear.

Let’s break this down into parts so that we can gain a better understanding of what’s driving the frenzy.

Here’s an overview.

The month of March 2023 began with wonder and optimism. Pundits celebrated the “big bang of generative artificial intelligence.” The World Economic Forum heralded “the golden age of AI.”

In a blog post titled The Age of AI has Begun, Bill Gates wrote: “The development of AI is as fundamental as the creation of the microprocessor, the personal computer, the Internet, and the mobile phone. It will change the way people work, learn, travel, get health care, and communicate with each other. Entire industries will reorient around it. Businesses will distinguish themselves by how well they use it.”

Gates continued: “Any new technology that’s so disruptive is bound to make people uneasy, and that’s certainly true with artificial intelligence.”

By the end of March, the euphoria had vanished. More than 1,000 technologists, including several prominent artificial intelligence (AI) researchers, had signed an open letter calling for a six-month pause in the training of AI systems more powerful than GPT-4, the newest large language model (LLM) from OpenAI. Since publication, the number of signatories has risen to 26,000.

Other critics went further, arguing that AI represents an existential threat to humanity comparable only to nuclear weapons. These doomsayers call for a total moratorium on further research.

But the leading developers of artificial intelligence systems refuse to comply. They remain committed to deploying ever-bigger LLMs as quickly as they can. Prominent scientists at the leading AI firms dismiss the criticisms as irrelevant or poorly informed. Meta’s Yann LeCun claims that the risks are “manageable,” dismisses the potential threat of human extinction as “infinitesimal,” and argues that the potential benefits of AI are “overwhelming.”

This leaves most of us confused. Should we be worried about AI? Is it really an existential threat? Or is it a great business opportunity? Or both?

To answer these questions, we need clarity. In this week’s series of short reports, we will examine what is behind the fear-mongering, the hype, and the deep weirdness that surround artificial intelligence.

I will start at the beginning with the drivers of the exponential change that speeds AI deployment. Then, in the next four newsletters, I will consider aspects of the technology itself and the competitive market dynamics that make this chapter of technology evolution so unsettling.

Let’s tackle this subject by thinking about one weird thing at a time.

Weird Thing #1:

We are in the “knee of the curve” of exponential change.

For 25 years, futurists like Ray Kurzweil have been reminding anyone who would listen that humanity was about to experience something unique and unparalleled.

Kurzweil explained that certain technologies (particularly AI) are advancing at an exponential rate.

This process had begun slowly, but it was accelerating: not only was the technology changing, but the rate of change itself was increasing. This created a positive feedback loop in which each wave of innovation drove further acceleration.

Eventually, he said, the increasing pace of innovation would reach “the knee in the curve” of exponential improvement, when the rate of new progress would increase so sharply that it could destabilize society and break brittle institutions.

That seems to be where we find ourselves today: right at the point when the pace of change begins to skyrocket.

People are beginning to notice the bewildering rate of change firsthand.

Even though we were warned, nobody expected the dawn of the AI Era to be so weird. Much of the weirdness has to do with the exponential increase in the pace of innovation.

One aspect (but hardly the only one) that makes the advent of consumer-facing artificial intelligence apps so strange is the rapid succession of new product launches.

March 2023 was marked by a series of major achievements. Each day, several new product releases were announced. The most notable were:

OpenAI:

· released GPT-4, a significant upgrade to GPT-3.5, the model behind the ChatGPT conversational AI app.

· unveiled plug-ins that connect ChatGPT to many more consumer-facing services, including Kayak and Expedia (for automated travel planning), OpenTable (for booking restaurant reservations), Instacart, Zapier, Wolfram and several more. This could expose tens or hundreds of millions of users to ChatGPT and will likely speed up the adoption of consumer-grade AI.

Microsoft:

· announced the integration of GPT-4 into its Office suite of apps, potentially reaching 1.2 billion users

· added an AI Copilot to the Office 365 suite to help users generate images and text

· introduced GPT-4 in Azure OpenAI Service

· added Bing Image Creator to the new Bing AI-powered search engine

Google:

· added generative AI to Google Workspace.

· released the PaLM API, which Google describes as a safe and easy way for developers to incorporate its LLMs into their apps, and MakerSuite, a tool that lets users prototype ideas.

· opened a waitlist for user access to Bard, its response to ChatGPT.

· released the 2.12.0 update to TensorFlow.

· released VLMaps for robot navigation.

But those are just the announcements from the leading companies. Taken together, they represent fewer than half of the innovations that were introduced in March.

Other AI news last month included:

· Stanford University released Alpaca 7B, a low-cost open source LLM, reportedly fine-tuned from Meta’s LLaMA 7B for less than $600, that is small enough for an individual user to run as a chatbot on a laptop.

· The model weights for Meta’s LLaMA large language model were leaked online.

· Midjourney 5.0 introduced high-fidelity generative imagery.

· New AI tools and generative AI projects were announced by Nvidia, Adobe, Unity, GitHub, Canva, Opera, Anthropic, PyTorch, Midjourney and many others.

· More than 100 new AI apps were introduced in a single week.

Key Insight: In artificial intelligence, the pace of innovation is accelerating. The underlying technologies that enable exponential growth have reached a stage of maturity sufficient to foster rapid improvement in many areas at once. Open source technology opens the aperture for innovation to more participants. This, too, increases the tempo. There is no sign that this intense pace will slow.

If this technology really is progressing at an exponential rate, then it will continue to accelerate to a point that exceeds our comprehension. That may sound like an exaggeration, but humans are notoriously bad at anticipating exponential growth.
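To see why intuition fails here, compare a quantity that grows by a fixed amount each step with one that doubles each step. This is a toy illustration: the starting value of 1 and the step counts are arbitrary assumptions, not data about any AI system.

```python
def linear_growth(start: float, step: float, periods: int) -> float:
    """Value after `periods` steps if a fixed `step` is added each time."""
    return start + step * periods


def exponential_growth(start: float, factor: float, periods: int) -> float:
    """Value after `periods` steps if the value is multiplied by `factor` each time."""
    return start * factor ** periods


# Illustrative assumption: both quantities start at 1.
for periods in (1, 5, 10, 20, 30):
    lin = linear_growth(1, 1, periods)
    exp = exponential_growth(1, 2, periods)
    print(f"after {periods:>2} steps: linear = {lin:>3.0f}, doubling = {exp:,.0f}")
```

For the first few steps the two series look almost identical, which is when most people form their expectations. By step 30 the linear series has reached 31, while the doubling series has passed one billion.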

As the next section explains, this trend is the answer to the question “why is this happening now?”

Weird Thing #2:

The paradox of declining cost and increasing performance.

Large language models (LLMs) based on the transformer architecture for deep learning are a fairly recent innovation. Just six years ago, in 2017, a group of researchers at Google published a paper (“Attention Is All You Need”) that introduced a new neural network architecture called the “transformer,” which is now used to build LLMs.

OpenAI released its first transformer-based model, a “generative pre-trained transformer” now known as GPT-1, in 2018.

The timing was no accident: it could not have happened much earlier. That’s because three conditions must be satisfied to make LLMs function at scale:

1. The availability of vast quantities of data

2. Optimized algorithms

3. A massive increase in processing power

By 2018, these elements were ready. The four billion people using the Internet at that time (today, the figure is more than five billion) were generating huge amounts of data; the transformer algorithms had been written; and steady improvement in microprocessors had made a particular type of computing power affordable at sufficient scale.

Since 2018, the price-performance of graphics processing units (GPUs) has improved by a factor of 500. That’s why LLMs are suddenly everywhere.

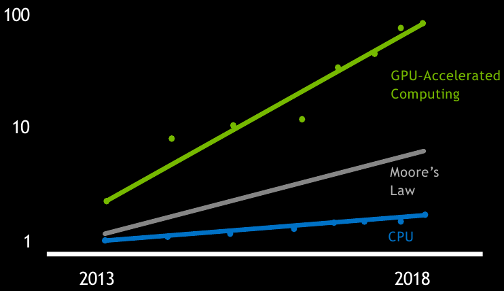

The price-performance of graphics processing units (GPUs) improves at a faster rate than Moore’s Law, Gordon Moore’s observation (first made in 1965 and revised in 1975) that the number of transistors on a chip doubles at a regular interval, popularly quoted as every 18 months.

(And yes, when we plot that increase on a chart, year over year, it matches the exponential growth curve described above.)

What Moore’s Law means in practice is that the cost of computing power declines at a steady, predictable rate: every 18 months, a dollar can purchase twice as much processing power.
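A minimal sketch of that arithmetic, assuming an idealized, perfectly steady 18-month doubling period (real chips have never tracked the curve this smoothly). It shows both sides of the same coin: how much more processing power a dollar buys, and how much less a fixed amount of processing power costs.

```python
DOUBLING_PERIOD_MONTHS = 18  # assumption: the popularly quoted Moore's Law interval


def compute_per_dollar(months: float, doubling_period: float = DOUBLING_PERIOD_MONTHS) -> float:
    """Relative processing power one dollar buys after `months`, versus month 0."""
    return 2 ** (months / doubling_period)


def cost_of_fixed_compute(months: float, doubling_period: float = DOUBLING_PERIOD_MONTHS) -> float:
    """Relative cost of a fixed amount of processing power after `months`."""
    return 1 / compute_per_dollar(months, doubling_period)


for years in (1.5, 3, 6, 10):
    months = years * 12
    print(
        f"{years:>4} years: {compute_per_dollar(months):8.1f}x compute per dollar, "
        f"same compute costs {cost_of_fixed_compute(months):.4f} of the original price"
    )
```

Under this assumption, ten years of 18-month doublings works out to roughly a hundredfold gain in processing power per dollar, or about 1% of the original cost for the same work.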

That rate of rapid improvement is now happening to the chips that power LLMs.

We’ve already seen what happens when computing power gets cheaper and more powerful. For the past two decades, Moore’s Law made it possible for information companies to grow to unprecedented size by riding the cost curve down while broadband networks enabled them to scale up the distribution of services to a growing number of phones and computers worldwide. The result was the world’s first planetary-scale companies producing information products at near-zero marginal cost, achieving valuations that ranged from $1 to $3 trillion.

Potentially, AI can accelerate this process. Whichever company, app, or platform can best exploit this trend will rocket ahead. This is why the tech giants are positioning for the greatest land grab in technology history.

In previous decades, growth in compute power was driven by steady improvement in the primary chip on a PC, the central processing unit (CPU).

For five decades, Moore’s Law proved remarkably accurate at forecasting the rate of improvement in CPUs. However, in recent years, price/performance improvement in CPUs has stalled as chip designers confront basic physics: there is a finite limit to the number of transistors that can be packed onto a tiny wafer of silicon.

Naturally, some wags proclaimed that Moore’s Law is dead.

But Moore’s Law is not dead. The trend remains in full effect on a different kind of chip, the graphics processing unit (GPU).

Originally invented to accelerate computer graphics for PC gaming, GPUs have a significantly different architecture from CPUs. Instead of racing through a sequence of varied instructions on a few powerful cores (which is what a CPU does so brilliantly), a GPU computes one big task by dividing it into many smaller tasks that its thousands of simpler cores can compute concurrently. This is known as parallel processing, and GPU chip architecture is optimized for it. This happens to be precisely where there is significant opportunity for further performance gains. Jensen Huang, the CEO of Nvidia, forecasts that GPUs will continue to improve by a factor of 500 in price/performance during the next five years.
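The divide-and-combine idea behind parallel processing can be sketched in a few lines of Python. This is a CPU-side analogy, not GPU code: it uses the standard library’s `concurrent.futures.ProcessPoolExecutor` to split one big sum into chunks, compute the chunks concurrently, and add up the partial results. A GPU applies the same pattern across thousands of far simpler cores; the chunk count of 8 and the problem size are arbitrary assumptions.

```python
from concurrent.futures import ProcessPoolExecutor


def sum_of_squares(chunk: range) -> int:
    """One small, independent task: sum the squares of a slice of the data."""
    return sum(x * x for x in chunk)


def split(n: int, parts: int) -> list[range]:
    """Divide range(n) into `parts` contiguous chunks of nearly equal size."""
    size, extra = divmod(n, parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < extra else 0)
        chunks.append(range(start, end))
        start = end
    return chunks


def parallel_sum_of_squares(n: int, parts: int = 8) -> int:
    """Split one big task into smaller tasks, run them concurrently, combine."""
    with ProcessPoolExecutor() as pool:
        return sum(pool.map(sum_of_squares, split(n, parts)))


if __name__ == "__main__":
    n = 1_000_000
    parallel = parallel_sum_of_squares(n)
    sequential = sum_of_squares(range(n))  # the same work, done one step at a time
    assert parallel == sequential
    print(parallel)
```

For a job this small, the overhead of starting worker processes can outweigh the gain. Parallelism pays off when a task is large and splits cleanly into independent pieces, which is the kind of workload GPUs are built for.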

The upshot? The cost of parallel processing will continue to fall as the power of the chips increases. Basic economics tells us that when the cost of something falls and the quality improves, we tend to use more of it.

Any business that depends on parallel processing will benefit from declining costs that enable it to scale profitably. AI companies intend to leverage this trend in order to grow fast.

We are about to witness a repeat performance of the incredible growth that characterized the information economy from 1980 to 2020, but this time it will occur in domains that require parallel processing on GPUs instead of single-threaded processing on CPUs. Artificial Intelligence, particularly deep learning, will be the biggest beneficiary of this bounty. LLMs require massive amounts of parallel processing power. The declining cost of parallel computation means we will continue to see significant advances in scale as the economics of artificial intelligence improve for the foreseeable future.

More powerful processors also speed up the rate of progress. Years passed between the release of the first LLM and the next version. Then the interval shrank to about a year between GPT-2 and GPT-3. Now the upgrades occur within a single calendar year. Some observers have predicted that GPT-5, the successor to GPT-4, will be released before the end of 2023, which would leave less than a year between generations 4 and 5.

Finally, the advent of generative AI that can write software automatically will also contribute to the acceleration of innovation and growth. The cost of computer programming is likely to plummet by 2030.

As costs fall and quality improves, we will consume more. AI will be used everywhere.

Welcome to the knee of the curve. Thanks to ever-increasing power in GPUs, the pace and scale of innovation in AI will continue to accelerate.

At the same time, the algorithms are becoming more computationally efficient: they are likely to generate better results with smaller datasets and less environmental impact. In turn, these changes will drive down costs even further, which will increase usage and further accelerate innovation.

We have entered the era when artificial intelligence will continue to become cheaper, more powerful, and more abundant. This is a powerful feedback cycle.

Key Insight: The price/performance of GPUs is expected to increase by a factor of 500 during the next five years. As parallel processing becomes cheaper and more powerful, it will influence the trajectory of technology innovation across many sectors. Computing tasks that benefit from parallel processing, such as deep learning, pharmaceutical design, automotive design, real-time 3D graphics, astrophysics and weather forecasting, are poised to benefit from GPU improvement. In just a few years, artificial intelligence will be ubiquitous.
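To put the 500x forecast in perspective, the sketch below converts a total improvement over a period into the equivalent constant yearly growth factor, and compares it with an idealized 18-month Moore’s Law doubling. The 500x-in-five-years input is the forecast cited in this article, not a measured result, and the smooth compounding is a simplifying assumption.

```python
def annual_factor(total_factor: float, years: float) -> float:
    """Constant yearly multiplier that compounds to `total_factor` over `years`."""
    return total_factor ** (1 / years)


def factor_over(years: float, doubling_period_years: float) -> float:
    """Total improvement after `years` if performance doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


YEARS = 5
gpu_forecast = 500                # the forecast cited in the article
moore = factor_over(YEARS, 1.5)   # assumption: idealized 18-month doubling

print(f"GPU forecast: {gpu_forecast}x over {YEARS} years "
      f"= about {annual_factor(gpu_forecast, YEARS):.2f}x per year")
print(f"Moore's Law:  {moore:.1f}x over {YEARS} years "
      f"= about {annual_factor(moore, YEARS):.2f}x per year")
```

Taken at face value, the forecast implies roughly a 3.5-fold gain every year, versus roughly 1.6-fold per year (about 10x over five years) for an 18-month doubling. That gap is the sense in which GPU price-performance would outpace Moore’s Law.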

This is the first article in a series called “The Deep Weirdness of Artificial Intelligence.” During this week, four more articles in this series will be released.

If you’ve enjoyed reading this newsletter, why not share it with a friend?
